Abstract

In this paper, we propose a novel domain-specific dataset named VegFru for fine-grained visual categorization (FGVC). While existing FGVC datasets mainly focus on animal breeds or man-made objects and offer limited labelled data, VegFru is a larger dataset of vegetables and fruits, which are closely tied to everyday life. Aimed at domestic cooking and food management, VegFru categorizes vegetables and fruits according to their eating characteristics, and each image contains at least one edible part of vegetables or fruits with the same cooking usage. All the images are labelled hierarchically. The current version covers 25 upper-level categories and 292 subordinate classes of vegetables and fruits. It contains more than 160,000 images in total and at least 200 images for each subordinate class. Accompanying the dataset, we also propose an effective framework called HybridNet to exploit the label hierarchy for FGVC. Specifically, features at multiple granularities are first extracted by handling the hierarchical labels separately. They are then fused through an explicit operation, e.g., Compact Bilinear Pooling, to form a unified representation for the final recognition. The experimental results on the novel VegFru and the public FGVC-Aircraft and CUB-200-2011 datasets indicate that HybridNet ranks among the top-performing methods on these datasets.

1. VegFru

1.1 Overview

Figure 1: Sample images in VegFru. Top: vegetable images. Bottom: fruit images. Best viewed electronically.

Currently, the dataset covers 25 upper-level categories (denoted as sup-classes) and 292 subordinate classes (denoted as sub-classes) of vegetables and fruits, which include all common species. It contains more than 160,000 images in total and at least 200 images for each sub-class, which makes it much larger than previous fine-grained datasets. In addition to the fine-grained annotation, the images in VegFru are assigned hierarchical labels. Compared to the vegetable and fruit subsets of ImageNet, the taxonomy adopted by VegFru is closer to the one commonly used in domestic cooking and food management, and the image collection strictly serves this purpose: each image in VegFru contains at least one edible part of vegetables or fruits with the same cooking usage. Some sample images are shown in Figure 1.
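As a rough illustration of what hierarchical labels mean in practice, the sketch below derives a (sup-class, sub-class) index pair from a sub-class name and checks the minimum image count per sub-class. The class names, the `SUB_TO_SUP` table, and both helper functions are hypothetical placeholders and do not reflect the actual VegFru taxonomy or annotation files.

```python
from collections import Counter

# Hypothetical sub-class -> sup-class table. These names are placeholders,
# NOT actual VegFru categories.
SUB_TO_SUP = {
    "plant_x_leaf": "sup_a",
    "plant_x_root": "sup_b",
    "plant_y_fruit": "sup_c",
    "plant_z_fruit": "sup_c",
}

SUB_CLASSES = sorted(SUB_TO_SUP)                 # fixed sub-class ordering
SUP_CLASSES = sorted(set(SUB_TO_SUP.values()))   # fixed sup-class ordering


def hierarchical_label(sub_name):
    """Return (sup_index, sub_index) for one image's sub-class name."""
    sup_name = SUB_TO_SUP[sub_name]
    return SUP_CLASSES.index(sup_name), SUB_CLASSES.index(sub_name)


def underpopulated(sub_names, min_images=200):
    """List sub-classes that have fewer than min_images annotated images."""
    counts = Counter(sub_names)
    return [s for s in SUB_CLASSES if counts[s] < min_images]
```

With a table like this, only the sub-class needs to be annotated per image, since the sup-class follows from it.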

1.2 Building Principles

Aiming at domestic cooking and food management, VegFru is constructed according to the following principles.

- The objects in the images of each sub-class have the same cooking usage.

- Each image contains at least one edible part of vegetables or fruits.

- Images that contain different edible parts of a given vegetable or fruit, e.g., leaf, flower, stem, root, are classified into separate sub-classes.

- Even when images contain the same edible part of a given vegetable or fruit, they are classified into different sub-classes if the objects are cooked differently.

- The objects in each image should be raw food materials. Images of cooked food in which the raw materials cannot be recognized are removed.

1.3 VegFru vs ImageNet subsets

Table 1: VegFru vs ImageNet subsets on dataset structure. #Sup: the number of sup-classes. #Sub: the number of sub-classes. Min/Max: the minimum/maximum number of images in each sub-class. #Sub<200: the number of sub-classes that contain fewer than 200 images.

1.4 VegFru vs Fine-grained Datasets

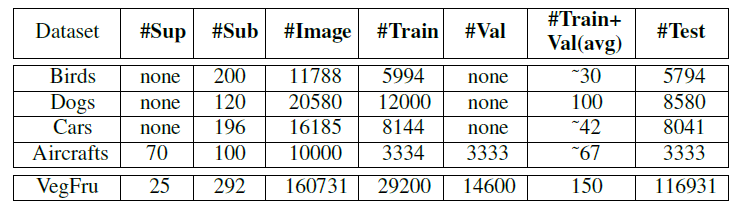

Table 2: VegFru vs Fine-grained Datasets. #Sup: the number of sup-classes. #Sub: the number of sub-classes. #Image: the total number of images. #Train/#Val/#Test: the number of images in the train/val/test set. #Train+Val(avg): the average number of images per sub-class available for model training (train and val sets combined).

2. HybridNet

2.1 Network Architecture

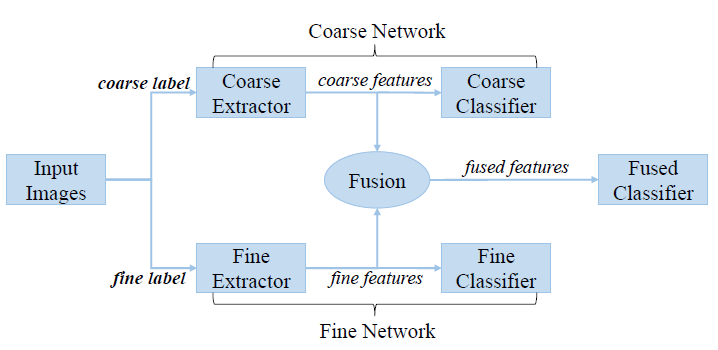

Figure 2: Illustration of the proposed HybridNet. Two streams of features, each dealing with one level of the hierarchical labels, are first extracted separately and then passed through the Fusion module to train the Fused Classifier for the overall classification.
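The abstract names Compact Bilinear Pooling as one example of the fusion operation. The following is a minimal sketch of its Tensor Sketch form for a single pair of feature vectors, assuming `x` and `y` stand for the two stream features at one location. It is not the HybridNet implementation: the function names and the seeds are illustrative, and the circular convolution is computed directly here, whereas practical implementations usually compute it as an element-wise product in the FFT domain.

```python
import random


def make_hash(n, d, seed):
    """Draw a random bucket in [0, d) and a random sign for each of n inputs."""
    rng = random.Random(seed)
    buckets = [rng.randrange(d) for _ in range(n)]
    signs = [rng.choice((-1, 1)) for _ in range(n)]
    return buckets, signs


def count_sketch(x, buckets, signs, d):
    """Project x into d dimensions: each entry is added, with its sign, to its bucket."""
    out = [0.0] * d
    for xi, b, s in zip(x, buckets, signs):
        out[b] += s * xi
    return out


def circular_conv(a, b):
    """Circular convolution of two equal-length vectors (direct O(d^2) form)."""
    d = len(a)
    out = [0.0] * d
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[(i + j) % d] += ai * bj
    return out


def compact_bilinear(x, y, hx, sx, hy, sy, d):
    """Tensor Sketch of the outer product of x and y, without forming it."""
    return circular_conv(count_sketch(x, hx, sx, d), count_sketch(y, hy, sy, d))


# Usage: fuse a 3-d and a 2-d feature into a d = 8 representation.
d = 8
hx, sx = make_hash(3, d, seed=0)
hy, sy = make_hash(2, d, seed=1)
fused = compact_bilinear([1.0, 2.0, -1.0], [0.5, -3.0], hx, sx, hy, sy, d)
```

The output has a fixed length `d` regardless of the input dimensions, which is what keeps the fused representation compact compared with the full outer product.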

2.2 Training Strategy

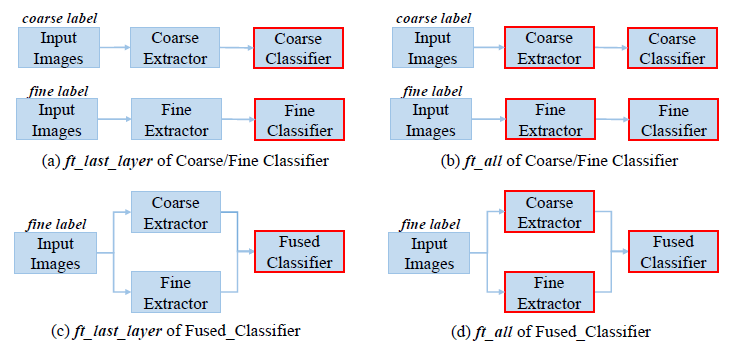

Figure 3: Training strategy of HybridNet. Inspired by the training of CBP-CNN, the training of the Fused Classifier is also divided into two stages, which follow the same notation ((c)(d)). The Fusion modules in (c)(d) are omitted for simplicity. In each stage, only the components surrounded by the red rectangle are finetuned, with the rest fixed. Best viewed electronically.

2.3 Performance Comparison

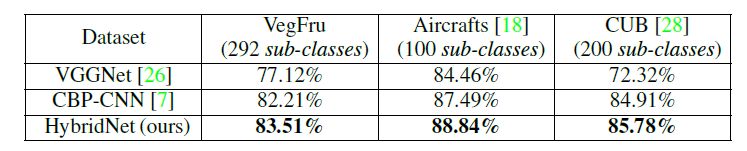

Table 3: Performance comparison for HybridNet. To keep the experiments consistent, HybridNet is trained on the train set of VegFru, the trainval set of FGVC-Aircraft, and the train set of CUB-200-2011. All results are evaluated on the test set and reported as top-1 mean accuracy.
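For readers reproducing the numbers, the sketch below shows two common readings of a top-1 metric: overall top-1 accuracy and top-1 accuracy averaged over classes. Which of the two the table reports is not stated on this page, so both are given, and all function names are illustrative.

```python
from collections import defaultdict


def top1_predictions(scores):
    """Index of the highest score in each row of class scores."""
    return [max(range(len(row)), key=row.__getitem__) for row in scores]


def top1_accuracy(scores, labels):
    """Fraction of samples whose top-scoring class equals the label."""
    preds = top1_predictions(scores)
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def mean_per_class_accuracy(scores, labels):
    """Top-1 accuracy computed per class, then averaged over classes."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for p, y in zip(top1_predictions(scores), labels):
        totals[y] += 1
        hits[y] += int(p == y)
    return sum(hits[c] / totals[c] for c in totals) / len(totals)
```

The two values coincide when every class has the same number of test images and can differ otherwise.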

3. Download Links

Download Links: [Paper.pdf] [Supplementary Material.pdf] [Github] [Download VegFru Dataset]

Please cite the following paper if you use VegFru or HybridNet in your publication:

@InProceedings{Hou2017VegFru,

Title = {VegFru: A Domain-Specific Dataset for Fine-grained Visual Categorization},

Author = {Saihui Hou and Yushan Feng and Zilei Wang},

Booktitle = {IEEE International Conference on Computer Vision},

Year = {2017}

}